Modern digital systems involve a wide array of voltages. Instead of just the classic 5V TTL, they now use components and buses ranging from 3.3V down to 1.0V. Interfacing with these systems is tricky, especially when you have multiple power sources, capacitive loads, and inrush current from devices being powered on. It’s important to understand the internal design of all drivers, both on your board and in the target, to be sure your final product is cheap but reliable.

When doing a security audit of an embedded device, interfacing is one of the main tasks. You have to figure out what signals you want to tap or manipulate. You pick a speed range and signal characteristics. For example, you typically want to sample at 4x the target data rate to ensure a clean signal or, failing that, lock onto a clock and sample synchronously at 1x. For active attacks such as glitching, you often have to do some analog design for the pulse generator and trigger it from your digital logic. Most importantly, you have to make sure your monitoring board does not disrupt the system. The Xbox tap board (pdf) built by bunnie is a great case study for this, as is his recent NeTV device.
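To make the 4x sampling idea concrete, here is a minimal sketch of one way to turn an oversampled capture back into bits: take a majority vote over each group of four samples. It assumes the capture is already aligned to bit boundaries and that samples are 0/1 integers, which a real tap would have to arrange (for example by hunting for an edge first). The function name `decode_oversampled` is made up for illustration.

```python
def decode_oversampled(samples, factor=4):
    """Recover one bit per `factor` samples by majority vote.

    Assumes `samples` is a list of 0/1 values already aligned to bit
    boundaries. A tie (e.g. 2 of 4 high) is decoded as 0.
    """
    bits = []
    # Step through the capture one bit period at a time, ignoring any
    # incomplete group at the end.
    for start in range(0, len(samples) - factor + 1, factor):
        group = samples[start:start + factor]
        bits.append(1 if sum(group) * 2 > factor else 0)
    return bits


# One glitched sample per bit period is outvoted by the other three.
capture = [1, 1, 1, 0,  0, 0, 1, 0,  1, 1, 1, 1]
print(decode_oversampled(capture))
```

The vote is what makes the extra samples worth having: a single noisy sample in a bit period does not flip the decoded bit.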

Building a mass-market board is different from a quick hack for a one-off review, and cost becomes a big concern. Sure, you can use an external level shifter or transceiver chip. However, these come with a lot of trade-offs. They add latency to switching times. They often require dual power supplies, which may not be available for your particular application. They usually require extra control pins (e.g., to select signal direction). They force you to switch a group of pins all at once, not individually. Most importantly, they add cost and take up board space. With a little more thought, you can often come up with a simpler solution.

There was a great article in Circuit Cellar on mixed-voltage digital interfacing. It goes into a lot of the different design approaches, from current-limiting resistors all the way up to BJTs and MOSFETs. The first step is to understand the internals of I/O pins on most digital devices. Below is a diagram I reproduced from the article.

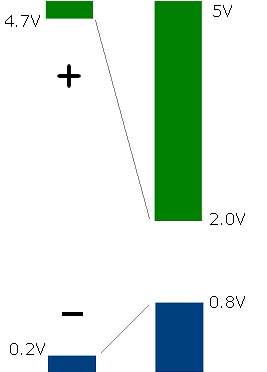

Whether you are using a microcontroller or logic chip, usually the I/O pins have a similar design. The input to the gate is protected by diodes that will conduct current to ground if the input voltage goes more negative than the GND pin or to Vcc if the input is more positive than Vcc. These diodes are normally used to protect against static discharge, but can also be a useful part of your design if you are careful.

The current limiting resistor can be calculated by figuring out the maximum and minimum voltages your input will see and the maximum current flow you want into your device. You also have to take into account the diode’s voltage drop. Here’s an example calculation:

Max input level: 6V

Vcc: 5V

Diode drop: 0.7V (typical)

Max current: 100 microamps

R = V / I = (6 - 5 - 0.7) / 0.0001 = 3K ohms
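The same arithmetic can be wrapped in a small helper so you can try other voltages. This is only a sketch of the idealized calculation above: it treats the diode drop as a fixed value, assumes the ground-side diode clamps at one drop below 0V, and the function name `series_resistor_ohms` and its defaults are my own.

```python
def series_resistor_ohms(v_in_min, v_in_max, vcc, diode_drop=0.7, i_max=100e-6):
    """Smallest series resistance that keeps clamp-diode current <= i_max.

    Idealized model: the upper diode conducts once the input exceeds
    vcc + diode_drop, and the lower diode conducts once the input falls
    below -diode_drop (GND taken as 0 V). Voltages in volts, i_max in amps.
    """
    # Voltage left across the resistor when the upper diode is conducting.
    over = max(0.0, v_in_max - (vcc + diode_drop))
    # Voltage left across the resistor when the lower diode is conducting.
    under = max(0.0, (0.0 - diode_drop) - v_in_min)
    # Size the resistor for whichever excursion is worse.
    return max(over, under) / i_max


# The example from the text: 6 V input into a 5 V part, 100 uA limit.
r = series_resistor_ohms(v_in_min=0.0, v_in_max=6.0, vcc=5.0)
print(f"{r:.0f} ohms")
```

If the result is 0, neither diode conducts under this model and the resistor is not needed for current limiting. Check the datasheet for the real injection-current limit rather than trusting the defaults here.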

While microcontrollers and 74xx logic devices are often tolerant of some moderate current, FPGAs are extremely sensitive. If you’re using an FPGA, use a level shifter chip or you’ll be sorry. Also, most devices are more sensitive to negative voltage than positive. Even if you’re within the specs for max current, a negative voltage may quickly lead to latch-up.

Level shifters usually take in dual voltages and safely isolate both sides. Some are one-way, which can be used as line drivers or line receivers. Others are bidirectional transceivers, and the direction is selected by an extra pin. If you can’t afford the extra pins, you can often combine an open-collector transmitter with a line receiver. However, if you happen to drive a line at the same time as the other side is driving it, you can fry your chips.
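A toy model helps show why simultaneous driving is the dangerous case. The sketch below assumes a single line with a pull-up resistor, where each driver is in one of three states: `"H"` (actively driving high), `"L"` (actively driving low), or `"Z"` (released, which is the only way an open-collector output can produce a high). The names and the three-state encoding are illustrative only, not taken from any particular part.

```python
def resolve_line(drivers):
    """Resolve the logic level of one pulled-up line.

    `drivers` is a list of "H", "L", or "Z" strings, one per device on
    the line. Returns 1, 0, or the string "contention" when one device
    drives high while another drives low (a low-impedance path from
    Vcc to GND through both output stages).
    """
    if "H" in drivers and "L" in drivers:
        return "contention"
    if "L" in drivers:
        return 0
    # Either someone drives high, or everyone released and the pull-up wins.
    return 1


print(resolve_line(["Z", "L"]))   # open-collector side released, other side low
print(resolve_line(["Z", "Z"]))   # nobody driving, pull-up sets the level
print(resolve_line(["H", "L"]))   # both sides driving opposite levels
```

Two open-collector outputs can never reach the contention case in this model, since neither can drive high. The risk comes when the other side is a push-pull output that drives high while your transmitter pulls low.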

To summarize, mixed voltage interfacing is a skill you’ll need when building your own devices or hacking existing ones. You can get by with just a current-limiting resistor in many cases, but be sure you understand the I/O pin design to avoid costly failures, especially in the field over time. For more assurance or with sensitive parts like FPGAs, you’ll have to use a level shifter.