Last time, we asked the question “What is the difference between analog and digital logic?” We identified two areas: noise and dynamic range. The latter limitation is that you can’t have an infinite (or even very high) voltage, so analog logic will always have some maximum value it can represent. With digital logic, you just add more bits to your representation and you can instantly increase the range. This time, we’ll consider noise and analog computers.

The first computers were analog. One of the initial applications was to calculate artillery trajectories under different conditions. The old way to fire a gun was to measure the wind (strength and direction), distance, and elevation to the target, and then look up a number in a table to get the appropriate angle. Originally these tables were generated by test-firing guns and then deriving the variations with calculations done by hand. People who did this work were known as “computers”.

Analog electronic computers were applied to this task in World War 2. This kind of computer works with continuously varying voltages, using op-amps to calculate sums and differences of values. The advantages are that computation is very fast and, in principle, the results are highly accurate: because the signal is continuous rather than discretely sampled, an analog computer does not suffer from quantization or aliasing.

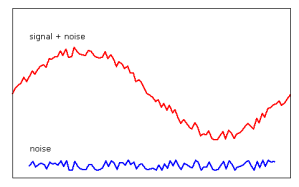

We’d still be using analog computers today if it weren’t for noise. The same continuity that gives an analog signal its advantages also makes it vulnerable to cascading noise. Every pass through an op-amp adds a small amount of noise, and later stages carry that noise forward along with the signal. At some point, the signal can no longer be recovered from the noise and the calculation can’t proceed. Much of the more recent work on analog computers seems to be focused on filtering noise.

As we saw last time, there is no such thing as a purely digital computer. “Digital” is a point of view, a way of interpreting an analog signal of some kind. This is both simple and sublime. It means that we can overcome the limitations inherent in our analog world by moving up a level of abstraction: manipulating symbols and abstract logic instead of concrete and continuous quantities such as a voltage.

Digital logic was designed to have the regenerative property. What this means is that each stage of processing starts over from scratch, generating a fresh output signal that depends only on the logical meaning of its inputs, not on their exact voltages. The key thing to understand here is that a stage does not amplify its input signal directly; it regenerates the output by switching a set of transistors. This means that, as long as the noise picked up between stages stays within tolerance, digital logic can be arbitrarily deep and the signal at the end is as free of noise as it was at the start.
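To make the contrast concrete, here is a minimal simulation sketch. The logic levels, the switching threshold, the noise bound, and the function names `analog_chain` and `digital_chain` are all assumptions chosen for illustration, not values from any real logic family. The analog chain passes the voltage along and keeps whatever noise it picked up; the digital chain decides what each input means and drives a fresh level.

```python
import random

V_LOW, V_HIGH = 0.0, 5.0   # assumed logic levels, in volts
THRESHOLD = 2.5            # assumed switching threshold of each digital stage
NOISE_PEAK = 0.3           # assumed worst-case noise added per stage, in volts


def analog_chain(v_in, stages, rng):
    """Pass a voltage through unity-gain analog stages; noise accumulates."""
    v = v_in
    for _ in range(stages):
        v += rng.uniform(-NOISE_PEAK, NOISE_PEAK)
    return v


def digital_chain(v_in, stages, rng):
    """Pass a voltage through digital buffers; each stage regenerates its output."""
    v = v_in
    for _ in range(stages):
        noisy = v + rng.uniform(-NOISE_PEAK, NOISE_PEAK)
        # The stage decides what the input means, then drives a fresh level.
        v = V_HIGH if noisy > THRESHOLD else V_LOW
    return v


if __name__ == "__main__":
    rng = random.Random(42)
    print("analog :", analog_chain(V_HIGH, 1000, rng))
    print("digital:", digital_chain(V_HIGH, 1000, rng))
```

Because the assumed noise peak is far smaller than the distance from either level to the threshold, the digital chain returns exactly the level it started with no matter how many stages it has, while the analog result wanders away from 5.0V as the noise adds up.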

To maintain the regenerative property, several rules have to be followed. Since transistors work with continuous voltages and are really analog components, the rules of a logic family state how they must be used. Each logic family (CMOS, TTL, LVTTL, ECL, GTL) has its own particular rules. One set of rules deals with timing: how fast the input voltages can change and how long they have to remain at a given level to be registered as a logical 1 or 0. Another set gives the voltage levels for outputting or receiving a logical 1 or 0.

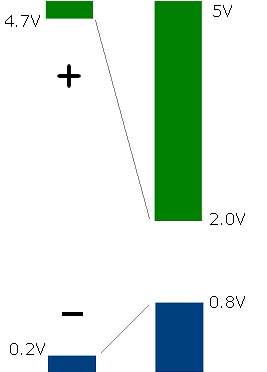

The rules for input and output voltage levels differ, which is key to noise rejection. To maximize noise rejection, you want your output levels to be as far apart as possible and your input thresholds closer together, so that a signal can pick up some noise on the way and still land on the correct side of the receiver’s threshold. This handles noise created by behavior such as ground bounce. If your input thresholds are too close together, though, you’ll get false readings due to noise or slowly changing intermediate input voltages (low slew rate). The gap between the guaranteed output level and the corresponding input threshold for the same logic state is called the noise margin and is measured in volts.

Here are the specified voltage levels for TTL. Output is on the left:

The diagram shows that outputs must have at least a 4.5V difference between logic levels, while the input thresholds are only 1.2V apart. For example, if the input is noisy while the sender is driving a logical 0 but the noise peaks stay below 0.8V, the receiver still reads a logical 0 and you’ll get a clean logical 0 from its output (0.2V or less).
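The arithmetic behind that example can be sketched in a few lines. The four levels below are inferred from the figures quoted in the text (0.2V low output, 4.5V output gap, 0.8V low input threshold, 1.2V input gap), not copied from a datasheet, and the names `noise_margins` and `read_input` are hypothetical helpers. Values are in millivolts so the arithmetic stays exact.

```python
# Levels in millivolts, inferred from the figures quoted in the text
# (an assumption for illustration, not datasheet values).
V_OL = 200    # highest voltage a sender may output for a logical 0
V_OH = 4700   # lowest voltage a sender may output for a logical 1 (200 + 4500)
V_IL = 800    # highest input voltage still read as a logical 0
V_IH = 2000   # lowest input voltage still read as a logical 1 (800 + 1200)


def noise_margins():
    """Return the (low, high) noise margins in millivolts."""
    return V_IL - V_OL, V_OH - V_IH


def read_input(mv):
    """Interpret an input voltage: 0, 1, or None if it is in the undefined band."""
    if mv <= V_IL:
        return 0
    if mv >= V_IH:
        return 1
    return None


if __name__ == "__main__":
    print(noise_margins())         # (600, 2700)
    print(read_input(200 + 500))   # 0: 500 mV of noise is within the low margin
    print(read_input(200 + 900))   # None: 1100 mV falls between the thresholds
```

With these assumed levels, a logical 0 tolerates up to 600mV of added noise before the receiver can no longer be sure what it is reading.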

Manufacturers design these tolerances into their components. If you also follow the timing rules, your digital logic will have all the desired properties: noise rejection, the regenerative property, and arbitrarily large dynamic range. This is what people actually mean when they talk about the reliability of digital data and processing.

By the way, different logic families have different thresholds. The output response over a continuously varying input voltage is called the voltage transfer characteristic. It indicates the S-curve of output voltage for a gate as the input varies. Another way of interpreting the above diagram is that by staying to the far left or right of this curve, we avoid the transition region and thus can reject more noise.
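A toy model can show why the flat ends of the curve reject noise. The sketch below uses a logistic function as a stand-in for the transfer characteristic of a non-inverting gate; the output levels, switching point, steepness, and the function name `transfer` are all assumptions for illustration, and a real gate’s curve comes from its datasheet or a circuit simulation.

```python
import math

V_LOW, V_HIGH = 0.2, 4.7   # assumed output levels, in volts
V_SWITCH = 1.4             # assumed midpoint of the transition region
STEEPNESS = 10.0           # assumed sharpness of the transition, per volt


def transfer(v_in):
    """Toy voltage transfer characteristic of a non-inverting gate."""
    s = 1.0 / (1.0 + math.exp(-STEEPNESS * (v_in - V_SWITCH)))
    return V_LOW + (V_HIGH - V_LOW) * s


if __name__ == "__main__":
    for v_in in (0.0, 0.8, 1.4, 2.0, 5.0):
        print(f"{v_in:.1f} V in -> {transfer(v_in):.3f} V out")
```

In this model, moving the input anywhere between 0.0V and 0.8V shifts the output by only about a hundredth of a volt, while the same size of change across the transition region swings the output almost from one level to the other.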

It’s often enlightening to step back and consider the assumptions underlying our everyday work. Given that we live in an analog world, I find the ideas and unique guarantees digital logic provides fascinating.